Distributed Locking with Postgres Advisory Locks

TL;DR

Postgres Advisory Locks are a great solution for arbitrary application locks, particularly in scenarios where you are already using Postgres and have a need to block concurrent mutations to a resource (that resource DOES NOT have to be data in Postgres).

Concurrency is hard 😔

We ran into a problem last month around concurrent mutations of our credit ledger which lead us to adopt a really cool feature of Postgres.

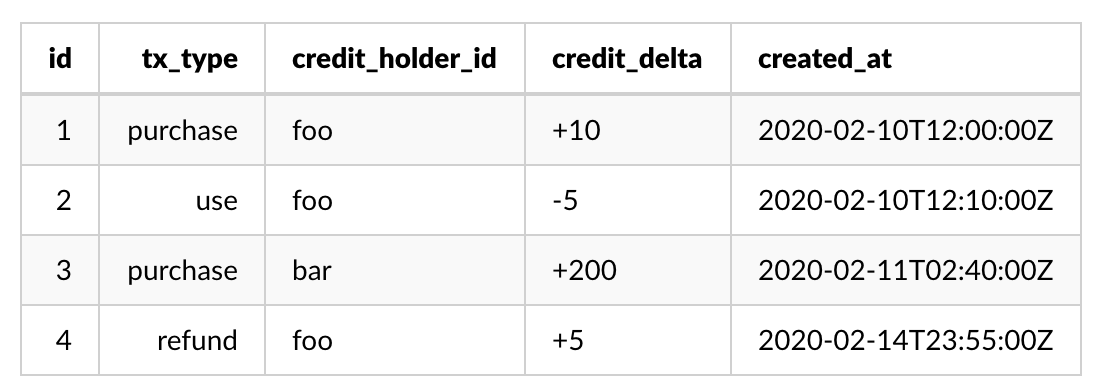

At Peachjar, we track the purchase, usage, and refund of credits as a log of transactions (e.g. a ledger). The ledger system is really just a table in Postgres and a microservice (with many deployments) that contain the rules for interacting with that table.

Image the table looking something like this:

We can calculate the current balance of a Credit Holder by aggregating the table:

SELECT sum(credit_delta) as balance

FROM ledger WHERE credit_holder_id = 'foo';

When a user wants to use their credits, we first check the ledger for their current balance. If they have enough credits, we can then make an entry in the ledger indicating they have spent those credits:

const balance = await getCreditBalance(creditHolderId)

if (balance < numberOfCreditsToUse) {

return Respond.invalid(

new NotEnoughCreditsError(

balance,

numberOfCreditsToUse

)

)

}

const updatedBalance = await useCredits(

creditHolderId,

numberOfCreditsToUse

)

return Respond.success({

creditHolderId,

updatedBalance,

creditsUsed: numberOfCreditsToUse,

})

Respondis an analog to how our microservices use structured responses when communicating with each other.

Race Time! 🏎️ 🏎️ 🏎️ 🏎️

This strategy is pretty sane when you have a single process, but it is easy to abuse in a distributed environment.

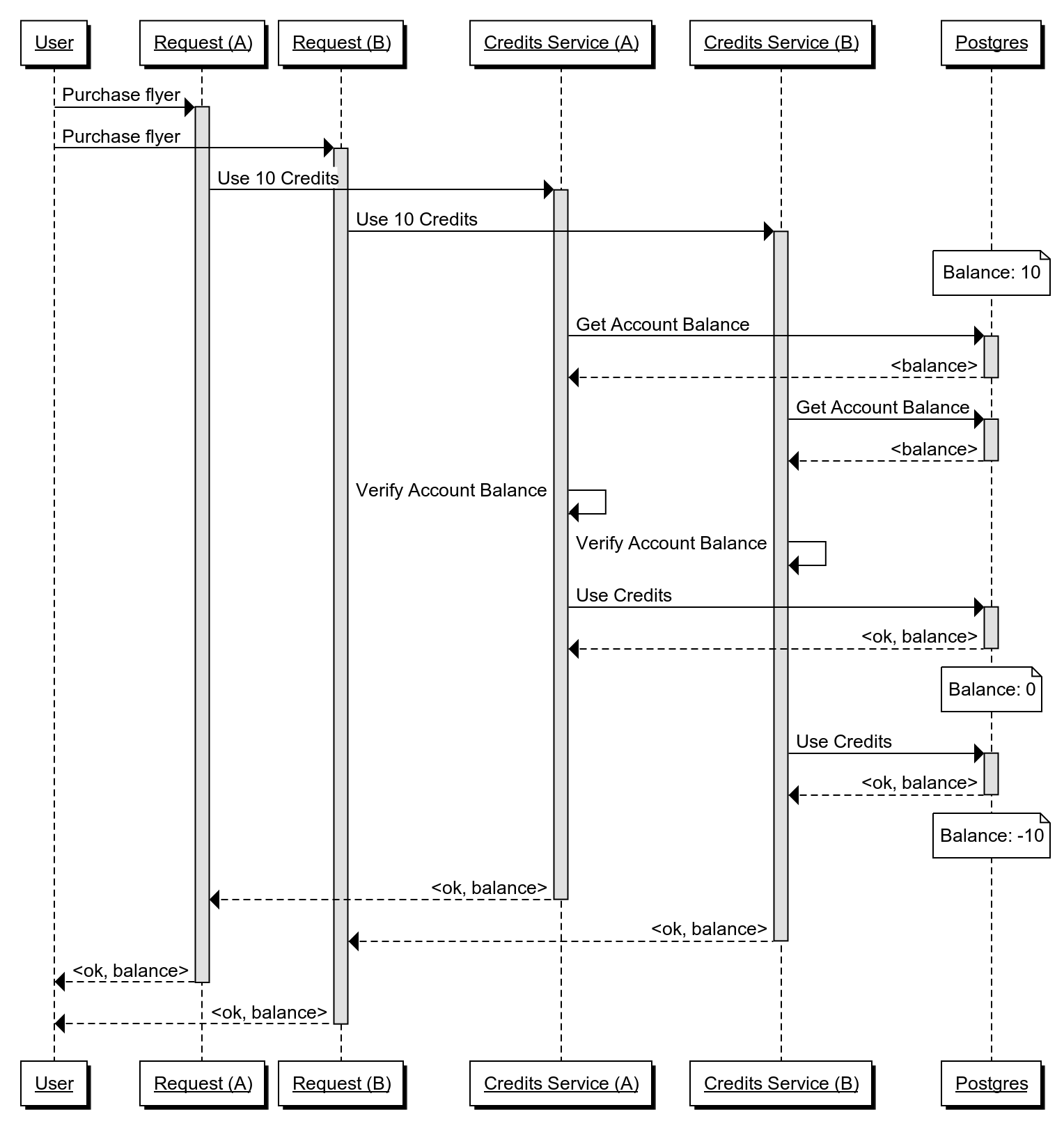

Take the following scenario:

The user executes two requests within a really short time of each other. This can happen a number of ways:

- Press the purchase button twice (and the button didn't disable after the first push).

- The user refreshes the page after a form submittal (classic replay of a POST).

- The user is malicious and purposely executes two concurrent purchase requests.

- A timeout is reached and a retry is executed (this was our actual problem).

Request A is sent to one instance of the Credit Service, which verifies the credit balance and uses the requested credits. However, while Request A is checking the balance, Request B is received. If Request B's balance check is executed before Request A uses credits, Request B will also succeed in using credits.

The Problem

In this scenario, it's clear to see that our system is brittle. We lack the ability to ensure concurrent transactions mutate the ledger according to our business rules. This problem is compounded by the nature of distributed systems -- we have multiple processes that can receive requests and we will have coordinate transactions across those services.

The Classic Solution

Since we are using a relational database, the classic solution is "row" and "table" locks. Let's exclude "row" locks immediately. Our ledger is append-only, so rows aren't updated; instead, entries are inserted to represent the state change. This means there is no row to lock.

Table locks are a completely valid solution:

BEGIN WORK;

LOCK TABLE ledger IN EXCLUSIVE MODE;

SELECT sum(credit_delta) as balance

FROM ledger WHERE credit_holder_id = 'foo';

INSERT INTO ledger (tx_type, credit_holder_id, credit_delta)

VALUES ('use', 'foo', -10);

COMMIT WORK;

Note: I am not an expert on Postgres Locks, so it's possible I used the wrong lock mode or that there might be a better approach.

Problems with Table Locks

While this approach might seem simple enough, I see two major problems with it:

1. The entire table is locked creating a scalability issue.

By locking the entire table, we've indiscriminately blocked other credit holders from performing transactions. With tens of thousands of users on the system at any given time, this becomes a major issue for us.

2. Locks are ugly to handle in code (leaky abstraction).

Let's modify our previous example to demonstrate this problem:

let response: Response

const tx = await createLockingTransaction()

try {

const balance = await getCreditBalance(tx, creditHolderId)

if (balance < numberOfCreditsToUse) {

response = Respond.invalid(

new NotEnoughCreditsError(

balance,

numberOfCreditsToUse

)

)

} else {

const updatedBalance = await useCredits(

creditHolderId,

numberOfCreditsToUse

)

response = Respond.success({

creditHolderId,

updatedBalance,

creditsUsed: numberOfCreditsToUse,

})

}

} catch (error) {

tx.rollback()

response = Respond.internalError(error)

} finally {

if (!tx.rolledBack) tx.commit()

}

return response

Part of the problem with locks is the need to execute them within a transaction. In fact, this is a general issue with backend software that relies on data stores that lock -- how can you create a clean repository abstraction when multiple repository methods have to coordinate their actions?

Some frameworks/languages have come up with clever ways to handle this. In Java, the Spring Framework had a couple of cool tricks to hide "transaction passing" from components:

- Thread Local variables [1], which would store the transaction state on the request thread.

- Aspected Oriented Programming [2] - dynamically rewrite repository methods to inject the transaction context into them.

- Request-scoped Dependency Injection [3] - the DI system creates the transaction and repository methods when the request is received.

Thread-local variables are unavailable to Node.js (given we only have one event-loop thread). You could potentially write a framework to do AOP in JavaScript/TypeScript, and you can even do request-scoped DI in frameworks like Awilix and Inversify.

Even if we could use an approach to hide "transaction passing" from domain code, relying on a transaction is still not an ideal approach for establishing a lock. What happens when you need to partially commit data? For instance, what if we register a credit card purchase, but then fail to add the credits to the ledger? The purchase still happened -- we can't allow the transaction to rollback because the registration of the credits failed. So transaction granularity is also an issue.

The Ideal Solution

I've talked about what I don't like already, but that's probably not very helpful. So the question is, "what is the ideal solution?"

Wouldn't it be great if,

- The system would prevent simultaneous mutations for a single credit holder.

- The approach would be effective across microservice instances.

- There would be no impact on the business logic of my code.

Specialized locking systems are actually quite common in large enterprises to solve this specific use case. In fact, you might actually use a few of the open-source varieties already: Consul, ZooKeeper, and etcd (though you might not use their locking functionality).

If we were to rewrite our code to use a locking mechanism, it might look something like this:

let lock: Lock

try {

lock = await acquireLock(creditHolderId)

} catch (error) {

return Respond.unavailable(error)

}

const balance = await getCreditBalance(creditHolderId)

if (balance < numberOfCreditsToUse) {

return Respond.invalid(

new NotEnoughCreditsError(

balance,

numberOfCreditsToUse

)

)

}

const updatedBalance = await useCredits(

creditHolderId,

numberOfCreditsToUse

)

lock.unlock().catch(error => console.error('Failed to unlock', error))

return Respond.success({

creditHolderId,

updatedBalance,

creditsUsed: numberOfCreditsToUse,

})

Not the prettiest code in the world, but in my opinion, it's cleaner than passing/relying on transactions to prevent concurrent mutations. Another important property of this approach is that it's not DB dependent. You could use this approach to prevent multiple calls to external systems, simulating the locking of traditionally "unlockable" resources (like S3 or Stripe).

In fact, our solution at Peachjar takes this approach a step further by integrating locks into our Peachjar Service Framework (PSF):

// This what a PSF handler looks like. The request protocol

// could be a NATS message or HTTP request.

export default {

lock: {

key: 'ledger:{{event.credit_holder_id}}',

},

aysnc handle(

context: Context<PurchaseRequest>,

{ getCreditBalance, useCredits }: Deps

) {

const balance = await getCreditBalance(creditHolderId)

if (balance < numberOfCreditsToUse) {

return Respond.invalid(

new NotEnoughCreditsError(

balance,

numberOfCreditsToUse

)

)

}

const updatedBalance = await useCredits(

creditHolderId,

numberOfCreditsToUse

)

return Respond.success({

creditHolderId,

updatedBalance,

creditsUsed: numberOfCreditsToUse,

})

}

}

In the example above, we use middleware to create a lock based on the incoming request and unlock the resource when the request is completed (or errors).

Choosing Postgres as a Lock Implementation

I would love to say we did an in-depth survey of lock implementations and chose a particular solution based on those findings. The reality is, we discovered our solution investigating Postgres table locks.

Postgres includes a really cool feature called Advisory Locks. An "advisory lock" is simply a tool/API provided by Postgres to create arbitrary locks that can be acquired by applications. These locks, however, are not enforced in any meaningful way by the database -- it's up to application code to give them meaning (the same way any other non-database distributed lock would work).

Postgres is a real win for us because we already use it for the ledger (and it's managed by AWS). However, I'm certain other lock solutions would have worked equally as well.

There are two types of Postgres Advisory Locks: session and transaction.

Session Locks

Session locks, once acquired, are held until they are manually released or the database client is disconnected:

-- Acquire a lock using an arbitrary BigInt

SELECT pg_advisory_lock(123);

-- Unlock

SELECT pg_advisory_unlock(123);

If pg_advisory_lock fails to acquire a lock, an error will be returned to the client. Postgres offers another function that will return a boolean value as to whether lock acquisition succeeded:

SELECT pg_try_advisory_lock(123);

-- return "true" is the lock is acquired, "false" if not.

Transaction Locks

Transactions locks differ only in that they are released at the end of a transaction. This is the preferred method for obtaining Advisory Locks. It's unlikely you will hold a transaction open indefinitely, whereas, it's extremely likely a connection will be held for hours (if not days or months).

Transaction Advisory Locks differ only in _xact on function names:

SELECT pg_advisory_xact_lock(123);

SELECT pg_try_advisory_xact_lock(123);

SELECT pg_advisory_xact_unlock(123);

Read the Docs

There are a couple of convenience functions related to Postgres Advisory Locks. I encourage you to read the documentation to get a better sense of how they can be used.

Conclusion

We were able to limit concurrent mutations to our ledger using Postgres Advisory locks. Our framework acquires these locks using before/after middleware, enabling us to avoid leaking database details into handlers. Postgres Advisory Locks also allow us to isolate our lock boundaries to a credit holder instead of having to lock the entire ledger table. Finally, by using the "transactional" version of Advisory Locks, we ensure a faulty code does not permanently lock entities by reducing the lifetime in which those locks can be held.

Addendum - Converting String to BigInt

You might have noticed that we were using String keys for our credit holders instead of BigInt. If you need to perform this kind of conversion, there is a great library to do it: GitHub - darkskyapp/string-hash. I've modified that code slightly to speed up the performance of the calculation (based on an issue in that repo):

export default function hashStringToInt(str: string): number {

let hash = 5381

let i = -1

while (i < str.length - 1) {

i += 1

hash = (hash * 33) ^ str.charCodeAt(i)

}

return hash >>> 0

}

References:

[1]. Spring and Threads: Transactions - DZone Java

[2]. Spring AOP transaction management in Hibernate – Mkyong.com

[3]. Quick Guide to Spring Bean Scopes | Baeldung

You might also be interested in these articles...

Stumbling my way through the great wastelands of enterprise software development.